Introduction Part 1

You can access the slides for this lecture here.

Chip Multiprocessors

Below is an abstract (and over simplified) model of how architecture courses focusing on single core CPUs see a processor:

In the core of the processor we have an arithmetic logic unit (ALU) that performs the computations. We also have a set of registers that can hold the instructions' operands, as well as some additional control logic. There is also an on-chip cache holding recently accessed data and instructions. The processor fetches data and instruction from the main memory off chip.

In a chip multiprocessor, also called multicore processor, you have several instances of the core of the processor on a single chip:

In this example we have a dual-core. Each core is a duplicate of most of we will find in a single core CPU: ALU, registers, caches, etc. While a single core processor can only execute one instruction at a time, a multicore can execute n instructions at the same time

An interesting question to ask is: why were these chip multiprocessors invented?

Here are some pictures of transistors:

From a very simplistic point of view we can see these as ON/OFF switches that sometimes let the current flow and sometimes not. They are the basic block with which we construct the logic gates that are making the modern integrated circuits used in processors. Broadly speaking, the computing power of a CPU is function of the number of transistors it integrates.

if we consider the first processor commercialised, in 1971, it had in the order of thousands of transistors:

A few years later processors were made of tens of thousands of transistors. A few years later it was hundreds of thousands, and since the 2000s we are talking about millions of processors. Since that time processors also start to have multiple compute unites or cores. Before that they had only one.

Fast-forward closer to today we now have tens of billions of transistors in a single chip:

They also commonly integrate several compute units. The Intel i7 from 2011 has 6 cores. The Qualcomm Snapdragon chip is an embedded processor with 4 ARM64 cores. And a recent server processor with 64 cores. Why did processor started to have more and more cores? Why this increase in number of compute units (cores) per chip?

Core Count Increase

For over 40 years we have seen a continual increase in the power of processors. This has been driven primarily by improvements in silicon integrated circuit technology: circuits continue to integrate more and more transistors. Until the early 2000s, this translated into a direct increase of the single core CPU clock frequency, i.e. speed: transistors are smaller, we can pack more on a chip but they consume less, so they can be clocked faster. It was a good time to be a software programmer: if a program was too slow, just wait for the next generation of computers to get a speed boost.

But the basic circuit speed increase become limited. For power consumption and heat dissipation reasons, the clock frequency of CPUs has not seen any significant improvement since the mid 2000s. Other architectural approaches to increase single processor speed have also been exhausted.

Still, the amount of transistors that can be integrated in a single chip continued to increase. So, if the speed of a single processor cannot increase, and we can still put more transistor on a chip, the solution is to put more processors on a single chip, and try to have them work together somehow.

Say we want to create a dual-core processor. Will it be twice as fast as the single core version? It's not that simple and has several implications that we will cover in this course. First, in terms of hardware, what processor or what processor(s) to put on the multicore? How to connect them? How do they access memory? Second, from the software point of view, how can we program this multicore processor? Can we use the same programming paradigms and languages we used for single core CPUs? How can we express the parallelism that is inherent to chip multiprocessors?

The terms core and processor will be used interchangeably in the rest of this course

Moore's Law

This is not really a law, more an observation/prediction, made by an engineer named Gordon Moore. It states that the transistor count on integrated circuits doubles every 18 months.

The transistor count on a chip depends on two factors: the transistor size and the chip size. Consider the evolution of the feature size (basically transistor size) between the Intel 386 from 1985 and the Apple M1 Max from 2021:

| CPU | Feature (transistor) size | Die (chip) size | Transistors integrated |

|---|---|---|---|

| Intel 386 (1985) | 1.5 μm | 100 mm2 | 275,000 |

| Apple M1 Max (2021) | 5 nm | 420.2 mm2 | 57,000,000,000 |

As one can observe the size of a transistor saw a 300x decrease, while the size of the chip itself, only went down by 4x over the same period of time. We can conclude that the increase in transistors/chip is mostly due to transistor size reduction.

Smaller Used to Mean Faster, but not Anymore

Why do smaller transistor translate in faster circuits? From a high level point of view, due to their electric properties, smaller transistors have a faster switch delay. This means that they can be clocked with a higher frequency, making the overall circuit faster. This was the case in the good old days when clock frequency kept increasing, however that trend stopped in the early/mid 2000s: the transistors became so tightly packed together on the chip that power density and cooling became problematic: clocking them with too high of a frequency would basically melt the chip.

The End of Dennard Scaling and Single Core Performance Increase

Dennard Scaling was this law from a 1974 paper stating that, based on the electric properties of transistors, as they grew smaller and we packed more on the same chip, they consumed less power, so the power density stayed constant. At the time experts concluded that power consumption/heating would not be an issue as transistors became smaller and more tightly integrated.

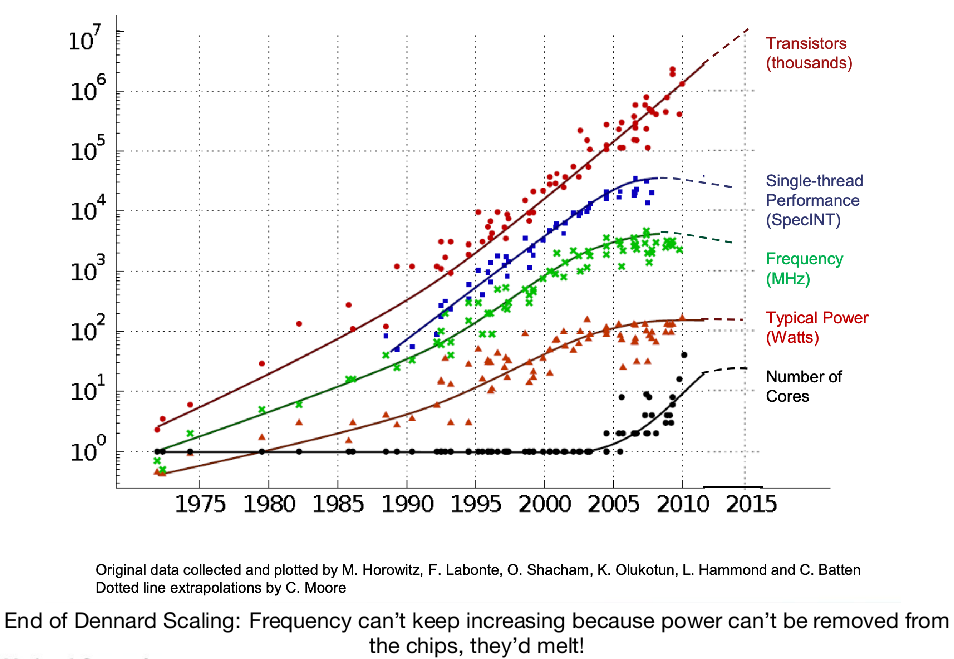

This law broke down in the mid-2000s, mostly due to the high current leakage coming with smaller transistor sizes. This is illustrated on this graph:

As one can see both the power and the frequency hit a plateau around that time, and single thread performance does to, proportionally. The number of transistors integrated keeps increasing, though.

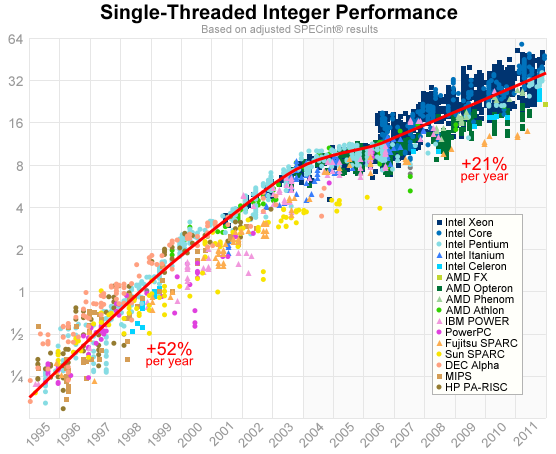

Here is another view on the issue, if you look at single threaded integer performance:

These numbers come from a standard benchmark named SPEC. As one can see the increase in performance has been more than divided by two. And if there is still some degree of increase, it does not come from frequency but from other improvements such as bigger caches or deeper / more parallel pipelines

Attempts at Increasing Single Core Performance

At that point we cannot increase the clock frequency, but we can still integrate more and more transistor in a chip. Is it possible to use these extra transistors to increase single core performance? It is indeed possible, although the solutions quickly showed limitations. Building several parallel pipelines was explored, to exploit Instruction Level Parallelism (ILP). However, ILP has diminished returns beyond ~4 pipelines. Another solution in to integrate bigger caches, but it becomes hard to get benefits past a certain size. In conclusion, efforts at increasing single core performance were quickly exhausted in the mid 2000s.

The "Solution": Multiple Cores

In the context previously described, an intuitive solution was to put multiple CPUs (cores) on a single integrated circuit (chip), named "multicore chip" or "chip multiprocessor", and to use these CPUs in parallel somehow to achieve higher performance. From the hardware point of view this represents a simpler to design vs. increasingly complex single core CPUs. But an important consideration is also that these processors cannot be programmed in the exact same way single core CPUs used to be programmed.

Multicore "Roadmap"

Below is an evolution (and projection) with time of the amount of cores per chip, as well as the feature size:

| Date | Number of cores | Feature size |

|---|---|---|

| 2006 | ~2 | 65 nm |

| 2008 | ~4 | 45 nm |

| 2010 | ~8 | 33 nm |

| 2012 | ~16 | 23 nm |

| 2014 | ~32 | 16 nm |

| 2016 | ~64 | 12 nm |

| 2018 | ~128 | 8 nm |

| 2020 | ~256 | 6 nm |

| 2022 | ~512 | 4 nm |

| 2024 | ~1024 | 3 nm |

| 2026 | ~2048 | 2 nm |

| scale discontinuity? | ||

| 2028 | ~4096 | |

| 2030 | ~8192 | |

| 2032 | ~16384 |

As one can see we went from the first dual cores in the 2000s to tens and even hundreds of cores today. This has been achieved with the help of a significant reduction in feature size, however it is unclear if this trend can continue as it becomes harder and harder to construct smaller processors.

Introduction Part 2

You can access the slides for this lecture here.

We have seen that due to several factors, in particular the clock frequency hitting a plateau in the mid 2000s, single core CPU performance could not increase anymore. As a result CPU manufacturers started to integrate multiple cores on a single chip, creating chip multiprocessors or multicores. Here we will discuss how to exploit the parallelism offered by these CPUs, and also give an overview of the course unit.

How to Use Multiple Cores?

How to leverage multiple cores? Of course one can run 2 separate programs, each running in a different process, on two different cores in parallel. This is fine, and these two programs can be old software originally written for single core processor, they don't need to be ported. But the real difficulty is when we want increased performance for a single application. So we need a collection of execution flows, such as threads, all working together to solve a given problem. Both cases are illustrated below:

Instruction- vs. Thread-Level Parallelism

Instruction-Level Parallelism. One way to exploit parallelism is through the use of instruction level parallelism (ILP). How does it work? Imagine we have a sequential program composed of a series of instructions to be executed one after the other. The compiler will take this program and is able to determine what instructions can be executed in parallel on multiple cores. Sometimes instructions can be even executed out of order, as long as there is no dependencies between them. This is illustrated below:

ILP is very practical because we just have to recompile the program, there is no need to modify the program i.e. no effort from the application programmer. However the amount of parallelism we can extract with such techniques is very limited, due to the dependencies between instructions.

Thread-Level Parallelism (TLP). Another way to exploit parallelism is to mostly rewrite your application with parallelism in mind. The programmer divides the program into (long) sequences of instructions that are executed concurrently, with some running in parallel on different cores:

The program executing is still represented as a process, and the sequences of instructions running concurrently are named threads. Now because of scheduling we do not have control over the order in which most of the threads' operations are realised, so for the computations to be meaningful the threads need to synchronise somehow.

Another important and related issue, is how to share data between threads. Threads belonging to the same program execute within a single process and they share an address space, i.e. they will read the same data at a given virtual address in memory. What happens if a thread reads a variable currently being written by another thread? What happen if two threads try to write the same variable at the same time? Obviously we don't want these things to happen and shared data access need to be properly synchronised: threads need to make sure they don't step on each other's feet when accessing shared data.

A set of threads can run concurrently on a single core. Thy will time-share the core, i.e. their execution will be interlaced and the total execution time for the set of threads will be the sum of each thread execution time. On a multicore processor, threads can run in parallel on different cores, and ideally the total execution time would be the time of the sequential version divided by the number of threads.

So contrary to ILP which is limited, TLP can really help to fully exploit the parallelism brought by multiple cores. However that means rewriting the application to use threads so there is some effort required from the application programmer. The application itself also needs to be suitable for being divided into several execution flows.

Data Parallelism

The data manipulated by certain programs, as well as the way it is manipulated, is very well-fitted for parallelism. For example it is the case with applications doing computations on single and multi-dimensional arrays. Here we have a matrix-matrix addition, where each element of the result matrix can be computed in parallel. This is called data parallelism. Many applications exploiting data parallelism perform the same or a very similar operation on all elements of a data set (an array, a matrix, etc.). In this example, where two matrices are summed, each thread (represented by a color) sums the two corresponding members of the operand matrices, and all of these sums can be done in parallel:

A few examples of application domains that lend themselves well to data parallelism are matrix/array operations (extensively used in AI applications), Fourier Transform, a lot of graphical computations like filters, anti-aliasing, texture mapping, light and shadow computations, etc. Differential equations applications are also good candidates, and these are extensively used in domains such as weather forecasting, engineering simulations, and financial modelling.

Complexity of Parallelism

Parallel programming is generally considered to be difficult, but depends a lot on the program structure:

In scenarios where all the parallel execution flows, let's say threads, are doing the same thing, and they don't share much data, then it can be quite straightforward. On the other end, in situations where all the threads are doing something different, or when they share a lot of data in write mode, when they communicate a lot and need to synchronise, then such programs can be quite hard to reason about, to develop, and to debug.

Chip Multiprocessor Considerations

Here are a few considerations with chip multiprocessors that we will cover in this course unit. First how should the hardware, the chip itself, be built. When we have multiple cores, how are they connected together? How are they connected to memory? Are they supposed to be used for particular programming patters such as data parallelism? or multithreading? If we want to build a multicore, should we use a lot of simple cores or just a few complex cores? Should the processor be general purpose, or specialised towards particular workloads?

We also have problematics regarding software, i.e. how to program these chip multiprocessors. Can we use a conventional programming language? Possibly an extended version of a popular language? Should we rather use a specific language, or a totally new approach?

Overview of Lectures

Beyond this introduction, we will cover in this course unit the following topics:

- Thread-based programming, thread synchronisation

- Cache coherency in homogeneous shared memory multiprocessors

- Memory consistency

- Hardware support for thread synchronisation

- Operating system support for threads, concurrency within the kernel

- Alternative programming views

- Speculation and transactional memory

- Heterogeneous processors/cores and programs

- Radical approaches (e.g. dataflow programming)

Shared Memory Programming

You can access the slides for this lecture here. All the code samples given here can be found online, alongside instructions on how to bring up the proper environment to build and execute them here.

Processes and Address Spaces

The process is the basic unit of execution for a program on top of an operating system: each program runs within its own process. A process executing on the CPU accesses memory with load and store instructions, indexing the memory with addresses. In the vast majority of modern processors virtual memory is enabled very early at boot time, and from that point every load/store instruction executed by the CPU targets a virtual address.

With virtual memory, the OS gives to each process the illusion that it can access all the memory.

The set of addresses the CPU can index with load/store instructions when running a given process is called the virtual address space (abbreviated as address space from now on).

It ranges from address 0 all the way to what the width of the address bus lets the CPU address, generally 48 bits (256 TB).

This (very large) size for the virtual address space of each process is unrelated to the amount of RAM in the computer: in practice the address space is very sparse and most of it is not actually mapped to physical memory.

On this example we have two address spaces (i.e. 2 processes), and both see something different in memory at address 0x42:

This is because through virtual memory, the address 0x42 is mapped to different locations in physical memory.

This mapping is achieved through the page table.

Each page table defines a unique virtual address space, and as such there is one page table per process.

This also allows to make sure processes cannot address anything outside their address space.

To leverage parallelism, two processes, i.e. two programs, can run on two different cores of a multicore processor. However, how can we use parallelism within the same program?

Threads

A thread is a flow of control executing a program It is a sequence of instructions executed on a CPU core A process can consist of one or multiple threads In other words, we can have for a single program several execution flows running on different cores and sharing a single address space.

In the example below we have two processes: A and B, each with its own address space:

In our example process A runs 3 threads, and they all see the green data.

Process B has 4 threads, and they see the same orange data.

A cannot access the orange data, and B cannot access the green data, because they run in disjoint address spaces.

However, all threads in A can access the green data, and all threads in B can access the orange data.

For example, if two threads in B read the memory at the address pointed by the red arrows, they will see the same value: x: threads communicate using shared memory, by accessing a common address space.

Seeing the same address space is very convenient for communications, that can be achieved through global variables or pointers to anywhere in the address space.

One can program with threads in various languages:

- C/C++/Fortran – using the POSIX threads (Pthread) library

- Java

- Many other languages: Python, C#, Haskell, Rust, etc.

Threads in C/C++ with Pthread

Pthread is the POSIX thread library available for C/C++ programs.

We use the pthread_create function to create and launch a thread.

It takes as parameter the function to run and optionally its arguments.

In the illustration below we have time flowing downwards.

The main thread of a process (that is automatically created by the OS when the program is started) calls pthread_create to create a child thread.

Children threads can use pthread_exit to stop their execution, and parent threads can call pthread_join to wait for another thread to finish:

A good chunk of this course unit, including lab exercises 1 and 2, will focus on shared memory programming in C/C++ with pthreads.

Use the Linux manual pages for the different pthread functions we'll cover (man pthread_*) and Google “pthreads” for lots of documentation.

In particular see the Oracle Multithreaded Programming Guide.

Here is an example of a simple pthread program:

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#define NOWORKERS 5

// Function executed by all threads

void *thread_fn(void *arg) {

int id = (int)(long)arg;

printf("Thread %d running\n", id);

pthread_exit(NULL); // exit

// never reached

}

int main(void) {

// Each thread is controlled through a pthread_t data structure

pthread_t workers[NOWORKERS];

// Create and launch the threads

for(int i=0; i<NOWORKERS; i++)

if(pthread_create(&workers[i], NULL, thread_fn, (void *)(long)i)) {

perror("pthread_create");

return -1;

}

// Wait for threads to finish

for (int i = 0; i < NOWORKERS; i++)

if(pthread_join(workers[i], NULL)) {

perror("pthread_join");

return -1;

}

printf("All done\n");

}

This program creates 5 children threads which all print a message on the standard output then exit. The main thread waits for the 5 children to finish, and exit. This is all done using the functions we just covered.

Notice how an integer is passed as parameter to each thread's function: it is cast as a void * because this is what pthread_create expects (in case we want to pass something larger we would put a pointer to a larger data structure here).

That value is passed as the parameter arg to the thread's function.

In our example we cast it back to an int.

We use the threads' parameters to uniquely identify each thread with an id.

Assuming the source code is present in a source file named pthread.c, you can compile and run it as follows:

gcc pthread.c -o pthread -lpthread

./pthread

Threads in Java

There are two ways of defining a thread in Java:

- Creating a class that inherits from

java.lang.Thread. - Creating a class that implements

java.lang.Runnable.

The reason to be for the second approach is that it lets you inherit from something else than Thread, as multiple inheritance is not supported in Java.

With both approaches, one need to implement the run method to define what the thread does when it starts running.

From the parent, Thread.start start the execution of the thread, and Thread.join waits for a child to complete its execution.

Below is an example of a Java program with the exact same behaviour as our pthread C example, using the first approach (inheriting from java.lang.Thread):

class MyThread extends Thread {

int id;

MyThread(int id) { this.id = id; }

public void run() { System.out.println("Thread " + id + " running"); }

}

class Demo {

public static void main(String[] args) {

int NOWORKERS = 5;

MyThread[] threads = new MyThread[NOWORKERS];

for (int i = 0; i < NOWORKERS; i++)

threads[i] = new MyThread(i);

for (int i = 0; i < NOWORKERS; i++)

threads[i].start();

for (int i = 0; i < NOWORKERS; i++)

try {

threads[i].join();

} catch (InterruptedException e) { /* do nothing */ }

System.out.println("All done");

}

}

This example defines the MyThread class inheriting from Thread.

In the constructor MyThread we just initialise an identifier integer member variable id.

We implement the run method, it just prints the fact that the thread is running as well as its id.

In the main function we create an array of MyThread objects, one per thread we want to create.

Each is given a different id.

Then we launch them all in a loop with start, and we wait for each to finish with join.

We can compile and run it as follows:

javac java-thread.java

java Demo

The second approach to threads in Java, i.e. implementing the Runnable interface, is illustrated in the program below:

class MyRunnable implements Runnable {

int id;

MyRunnable(int id) { this.id = id; }

public void run() { System.out.println("Thread " + id + " running"); }

}

class Demo {

public static void main(String[] args) {

int NOWORKERS = 5;

Thread[] threads = new Thread[NOWORKERS];

for (int i = 0; i < NOWORKERS; i++) {

MyRunnable r = new MyRunnable(i);

threads[i] = new Thread(r);

}

for (int i = 0; i < NOWORKERS; i++)

threads[i].start();

for (int i = 0; i < NOWORKERS; i++)

try {

threads[i].join();

} catch (InterruptedException e) { /* do nothing */ }

System.out.println("All done");

}

}

Here we create a class MyRunnable implementing the interface in question.

For each thread we create a Thread object and we pass to the constructor a MyRunnable object instance.

Then we can call start and join on the thread objects.

Output of Our Example Programs

For both Java and C examples, an example output is:

Thread 1 running

Thread 0 running

Thread 2 running

Thread 4 running

Thread 3 running

All done

One can notice that over multiple execution, the same program will yield a different order. This illustrates the fact that without any form of synchronisation, the programmer has no control over the order of execution.: the OS scheduler decides, and it is nondeterministic. A possible scheduling scenario is:

Another possible scenario assuming a single core processor:

Of course this lack of control over the order of execution can be problematic in some situations where we really need a particular sequencing of certain thread operations, for example when a thread needs to accomplish a certain task before another thread can start doing its job. Later in the course unit we will see how to use synchronisation mechanisms to manage this.

Data Parallelism

As we saw previously, data parallelism is a relatively simple form of parallelism found in many applications such as computational science. There is data parallelism when we have some data structured in such a way that the operations to be performed on it can easily be parralelised. This is very suitable for parallelism, the goal is to divide the computations into chunks, computed in parallel.

Take for example the operation of summing two arrays, i.e. we sum each element of same index in A and B and put the result in C:

We can have a different thread realise each addition, or fewer threads each taking care of a subset of the additions. This type of parallelism is exploited in vector and array processors. General purpose CPU architectures have vector instructions extensions allowing things like applying the same operation on all elements of an array. For example Intel x86-64 use to have SSE and now AVX. From a very high level perspective, GPUs also work in that way.

Let's see a second example more in details, matrix-matrix multiplication. We'll use square matrices for the sake of simplicity. Recall that with matrix multiplication, each element of the result matrix is computed as the sum of the multiplication of the elements in one column of the first matrix with the element in one line of the second matrix:

How can we parallelise this operation? We can have 1 thread per element of the result matrix, each thread computing the value of the element in question With a n x n matrix that gives us n2 threads:

If we don't have a lot of cores we may want to create fewer threads, it is generally not very efficient to have more threads than cores So another strategy is to have a thread per row or per column of the result matrix:

- Finally, we can also create an arbitrary number of threads by dividing the number of elements of the result matrix by the number of threads that we want and have each thread take care of a subset of the elements:

Given all these strategies, there are two important questions with respect to the amount of effort/expertise required from the programmer:

- What is the best strategy to choose according to the situation? Does the programmer need to be an expert to perform this choice?

- How does the programmer indicate in the code the strategy to use? Is there a lot of code to add to a non-parallel version of the code? If we want to change strategy, do we have to rewrite most of the program?

The programmer's effort should in general be minimised if we want a particular parallel framework, programming language or style of programming to become popular/widespread. But of course it also depends on the performance gained from parallelisation Maybe it's okay to rewrite entirely an application with a given paradigm/language/framework if it results in a 10x speedup.

Implicit vs. Explicit Parallelism

Here is an example of C/C++ parallelisation framework called OpenMP:

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

#define N 1000

int A[N][N];

int B[N][N];

int C[N][N];

int main(int argc, char **argv) {

for(int i=0; i<N; i++)

for(int j=0; j<N; j++) {

A[i][j] = rand()%100;

B[i][j] = rand()%100;

}

#pragma omp parallel

{

for(int i=0; i<N; i++) // this loop is parallelized automatically

for(int j=0; j<N; j++) {

C[i][j] = 0;

for(int k=0; k<N; k++)

C[i][j] = C[i][j] + A[i][k] * B[k][j];

}

}

printf("matrix multiplication done\n");

return 0;

}

We can parallelise the matrix multiplication operation in a very simple way by adding a simple annotation in the code: #pragma omp parallel.

All the iterations of the first outer loop will then be done in parallel by different threads.

The programmer's effort is minimal: the framework is taking care of managing the threads, and also to spawn the ideal number of threads according to the machine executing the program.

We will cover OpenMP in more details later in this course unit.

To compile and run this program, assuming the source code is in a file named openmp.c:

gcc openmp.c -fopenmp -o openmp

./openmp

This notion of programmer's effort is linked to the concepts of explicit and implicit parallelism. With explicit parallelism, the programmer has to write/modify the application's code to indicate what should be done in parallel and what should be done sequentially. This ranges from writing all the threads' code manually, or just putting some annotations in sequential programs (e.g. our example with OpenMP). On the other hand, we have implicit parallelism, which requires absolutely no effort from the programmer: the system works out parallelism by itself. This is achieved for example by some languages able to make strong assumption about data sharing, for example pure functions in functional languages have no side effects so they can run in parallel.

Example Code for Implicit Parallelism

Here are a few examples of implicit parallelism. Languages like Fortran allow expression on arrays, and some of these operations will be automatically parralelised, for example summing all elements of two arrays:

A = B + C

If we have pure functions (functions that do not update anything but local variables), e.g. with a functional programming language, these functions can be executed in parallel.

In these examples the compiler can run f and g in parallel:

y = f(x) + g(z)

Another example:

p = h(f(x),g(z))

Automatic Parallelisation

In an ideal world, the compiler would take an ordinary sequential program and derive the parallelism automatically. Implicit parallelism is the best type of parallelism from the engineering effort point of view, because the programmer does not have to do anything. If we have a sequential program it would be great if the compiler can automatically extract all the parallelism. There was a lot of effort invested in such technologies at the time of the first parallel machines, before the multicore era. It works well on small programs but in the general case, analysing dependencies in order to define what can be done in parallel and what needs to be done sequentially becomes very hard. And of course the compiler needs to be conservative not to break the program, i.e. if it is not 100% sure that two steps can be run in parallel they need to run sequentially. So overall performance gains through implicit parallelism are quite limited, and to get major gains one need to go the explicit way.

Example Problems for Parallelisation

Below are a few examples of dependencies that a compiler may face when trying to extract parallelism automatically. They all regard parallelising all of some iterations of a loop.

Case 1. Here, in the first loop, 3 slots after the index computed at each iteration, we have a read dependency:

for (int i = 0 ; i < n-3 ; i++) {

a[i] = a[i+3] + b[i] ; // at iteration i, read dependency with index i+3

}

Case 2. Here we have another read dependency, this time 5 slots before the index computed at each iteration:

for (int i = 5 ; i < n ; i++) {

a[i] += a[i-5] * 2 ; // at iteration i, read dependency with index i-5

}

Case 3. Here, still a read dependency, and we don't know if the slot read is before or after the one being computed:

for (int i = 0 ; i < n ; i++) {

a[i] = a[i + j] + 1 ; // at iteration i, read dependency with index ???

}

Automatic Parallelisation

Let's consider case 1 above. We can illustrate the data dependency over a few iterations of the loop as follows:

If we parallelise naively and run each iteration in parallel, there is a chance for e.g. iteration 3 to finish before iteration 0, which would break the program. We can observe that the dependency has a positive offset: we read at each iteration what was in the array before the loop started. We are never supposed to read a value computed by the loop itself. So the solution to parallelise this loop is to internally make a new version of the array and read from an unmodified copy:

parrallel_for(int i=0; i<n-3; i++)

new_a[i] = a[i+3] + b[i];

a = new_a;

If we consider case 2 above, the trick we just presented does not work. Let's illustrate the dependency:

Because what we read at iteration i is supposed to have been written 5 iteration before, we can't rely on a read-only copy

Also, parallelising all iterations will break the program.

We observe that at a given time, there is no dependency between sets of 5 iterations, (e.g. iterations 5-9 or iterations 10-14).

The solution here is thus to limit the parallelism to 5.

Concerning case 3 above, because of the way the code is structured, it is not possible to automatically parallelise the loop.

Shared Memory

Everything in this lecture has been said on the basis that threads share memory In other words they can all access the same memory, and they will all see the same data at a given address Without shared memory, for example when the concurrent execution flows composing a parallel application run on separate machines, these execution flows have to communicate via messages, more like a distributed system.

Shared Memory Multiprocessors

You can access the slides for this lecture here.

We have previously introduced how to program with threads that share memory for communication. Here we will talk about how the hardware is set up to ensure that threads running on different cores can share memory by seeing a common address space. In particular, we will introduce the issue of cache coherency on multicore processor.

Multiprocessor Structure

The majority of general purpose multiprocessors are shared memory. In this model all the cores have a unified view on memory, e.g. in the figure on the top of the example below they can all read and write the data at address x in a coherent way. This is by opposition to distributed memory systems where each core or processor has its own local memory, and does not necessarily have a direct and coherent access to other processor's memory.

Shared memory multiprocessors are dominant because they are easier to program. However, shared memory hardware is usually more complex. In particular, each core on a shared memory multiprocessor has its own local cache, Here we will introduce the problem of cache coherency in shared memory multiprocessor systems. In the next lecture we'll see how it is managed concretely.

Caches

A high performance uniprocessor has the following structure:

Main memory is far too slow to keep up with modern processor speed it can take up to hundreds of cycles to access, versus the CPU registers that are accessed instantaneously/ So another type of on-chip memory is introduced, the cache. It is much faster than main memory, being accessed in a few cycles. It is also expensive, so its size is relatively small, and thus the cache is used to maintain a subset of the program data and instructions.

The cache can have multiple levels: generally in multiprocessors we have a level 1 and sometimes level 2 caches that are local to each core, and a shared last level cache.

If an entire program data-set can fit in the cache, the CPU can run at full speed. However, it is rarely the case on modern applications and new data/instructions needed by the program have to be fetched from memory (on each cache miss). Also, newly written data in cache must eventually be written back to main memory.

The Cache Coherency Problem

With just one CPU things are simple, data just written to the cache can be read correctly whether or not it has been written to memory. But things get more complicated when we have multiple processors. Indeed, several CPUs may share data, i.e. one can write a value that the other needs to read. How does that work with the cache?

Consider the following situation, illustrated on the schema below.

We have a dual-core with CPU A and B, and some data x in RAM.

CPU A first reads it, then updates it in its own cache into x'.

Then later we have CPU B that wishes to read the same data.

It's not in its cache, so it fetches it from memory, and ends up reading the old value x.

Clearly that is not OK: A and B expect to share memory and to see a common address space. Threads use shared memory for communications and after some data, e.g. a global variable, is updated by a thread running on a core, another thread running on another core expects to read the updated version of this data: cores equipped with caches must still have a coherent view on memory, this is the cache coherency problem.

The Cache Coherency Problem

An apparently obvious solution would be to ensure that every write in the cache is directly propagated to memory. It is called a write-through cache policy:

However, this would mean that every time we write we need to write to memory. And every time we read we also need to fetch from memory in case the data was updated. This is very slow and negates the cache benefits, thus it's not a good idea.

The Cache Coherency Problem

So how can we overcome these issues? Can we communicate cache-to-cache rather than always go through memory? In other words, when a new value is written in one cache, all other values somehow located in other caches somehow would need to be either updated or invalidated. Another issue is: what if two processors try to write to the same location. In other words how to avoid having two separate cache copies? This is what we refer to by cache coherency. So things are getting complex, and we need to develop a model. How to efficiently achieve cache coherency in a shared memory multiprocessor is the topic of the next lecture.

Cache Coherence in Multiprocessors

You can access the slides for this lecture here.

We have previously introduced the issue of cache coherence in multiprocessors. The problem is that with a multiprocessor each core has a local cache, and data in that cache may not be in sync with memory. We need to avoid situations where two cores have multiple copies of the same data with different values for that data. If we try to naively use in a multicore a traditional cache system (as used in single core CPUs), the following can happen:

- At first we have the data

xin memory and core A reads it then updates it tox'. For performance reasons A does not write the data in memory yet. - Later, B wants to read the data.

It's not in B's cache so it fetched it from memory: B reads

xwhich is not the last version of the data.

This of course breaks the program. So we need to define a protocol to make sure that all caches have a coherent view on memory. This involves cache to cache communication: for performance reasons, we want to avoid involving memory as much as we can.

Coping with Multiple Cores

Here we will cover a simple protocol named bus-based coherence or bus snooping. All the cores are interconnected with a bus that is also linked to memory:

Each core has have some special cache management hardware. This hardware can observe all the transactions on the bus and it is also able to modify the cache content independently of the core. With this hardware, when a given cache observes pertinent transactions on the bus, it can take appropriate actions Another way to look at this is that a cache can send messages to other caches, and receive messages from other caches.

Cache States, MSI Protocol

On the hardware we described we'll present now a cache coherence protocol, i.e. a way for the caches to exchange messages in order to maintain a coherent view on the memory's content for all cores.

Recall that caches hold data (and read/write it from/to memory) at the granularity of a cache line (generally 64 byte). Each cache has 2 control bits for each line it contains, encoding the state the line currently is in:

Each line can be can be in one of three different states:

- Modified state: the cache line is valid and has been written to but the latest

values have not been updated in memory yet

- A line can be in the modified state in at most 1 core

- Invalid: there may be an address match on this line but the data is

not valid

- We must go to memory and fetch it or get it from another cache

- Shared: implicit 3rd state, not invalid and not modified

- A valid cache entry exists and the line has the same values as main memory

- Several caches can have the same line in that state

These states can be illustrated as follows:

The Modified/Shared/Invalid states, as well as the transitions we'll describe next, define the MSI protocol.

Possible States for a Dual-Core CPU

Let's describe the MSI protocol on a dual core processor for the sake of simplicity. For a given cache line, we have the following possible states:

The different combinations of states on the dual core are as follows:

- (a) modified-invalid: one cache has the line in the modified state, i.e. the data in there is valid and not in sync with memory, and the other cache has the line in the invalid state.

- (b) invalid-invalid: we have the line invalid in both caches.

- (c) invalid-shared: the line is invalid in one cache, and shared (i.e. valid and in sync with memory) in the other cache.

- (d) shared-shared: the line is valid and in sync with memory in both caches.

By symmetry we also have (a') invalid-modified as well as (c') shared-invalid.

Recall that by definition if a cache has the data in the modified state, that cache should be the only one with a valid copy of the line in question, hence the states modified-shared and modified-modified, which break that rule, are not possible.

State Transitions

After we listed all the possible legal states, let's see now, for each state, how read and write operations on each of the two cores affect the state of the dual core. This regards 3 aspects:

- What are the messages sent between cores: we'll see messages requesting a cache line, messaging asking a remote core to invalidate a given line, and messages asking for both a line content as well as its invalidation from a remote cache.

- When memory needs to be involved, e.g. to fetch a cache line or to write it back.

- What are the state transitions between the state combinations listed above for our dual core.

State Transitions from (a) Modified-Invalid

Let's start with the modified/invalid state. In that state core 1 has the line modified, it's valid but not in sync with memory, and core 2 has the line invalid, it's in the cache but the content is out of date.

Read or Write on Core 1. If we have either a read or a write on core 1, these are just served by the cache (cache hits), no memory operation is involved, and there is no state transition.

Read on Core 2. If there is a read on core 2, because the line is invalid in its cache it cannot be served from there. So core 2 places a read request on the bus, which gets snooped by core 1. We can have only one cache in the modified state, so with this particular protocol we are aiming at a shared-shared final state. So core 1 writes back the data to memory to have it in sync, and goes to the shared state. Core 1 also sends the line content to core 2 that switches to the shared state. We end up in the (d) shared-shared state, i.e. both caches have the line valid and in sync with memory:

Write on Core 2. In case of a write on core 2, its cache has the line invalid, so it places a read request on the bus, which is snooped by core 1. Core 1 has the data in modified state so it first writes it back to memory, and then sends the line to core 2. Core 2 updates the line so it sends an invalidate message on the bus, and core 1 switches to the invalid state. Core 2 then switches to modified. Overall the state changes to (a'): invalid/modified:

State Transitions from (b) Invalid-Invalid

One may ask in what circumstances does a dual core ends up with the same cache line in the invalid state in both caches, knowing that what triggers the invalidation of a line in the cache of a given core is a write on the other core (i.e. that other core has the data in modified). A given cache line can be invalidated in both core following a write by an external actor, e.g. a device through Direct Memory Access.

Read on Core 1 or Core 2. In that state both caches have the line but its content is out of date. If there is a read on one core, the cache in question places a read request on the bus. Nobody answers and the cache then fetch the data from memory, switches the state to shared. The system ends up in the (c') shared-invalid or (c) invalid-shared state, according to which core performed the read. For example with core 1 performing the read:

Write on core 1 or 2. If there is a write on a core, the relevant cache does not know about the status of the line in other cores so it places a read request on the bus. Nobody answers so the line is fetched from memory and the write is performed in the cache so the writing core switch the state to modified. We end up in (a) modified-invalid or (a') invalid-modified according to which core performed the write operation, e.g. if it was core 1:

State Transitions from (c) Invalid-Shared

Read on Core 1. The line is in the invalid state on that cache, so it is present but the content is out of date. The other cache has the line in the shared state so it is present, valid, and in sync with memory. In case of a read on core 1, its cache places a read request on the bus, it is snooped by cache 2 which replies with the cache line. Core 1 switches to the shared state, and the system is now in the (d) shared-shared state:

Read on Core 2. In case of a read on core 2, the read is served from the cache, non memory operation is required, and there is no state transition: the system stays in (c) invalid-shared.

Write on Core 1. Because core 1 has the line in the invalid state, it starts by placing a read request on the bus. Core 2 snoops the request and replies with the data. It's in the shared state so no need for a writeback in memory. Core 1 wants to update the line, so it places an invalidate request on the bus. Core 2 receives it and switches to invalid. Finally core 1 performs the write and switches to modified. We end up in the (a) modified-invalid state:

Write on Core 2. In the case of a write on core 2, even if nobody needs to invalidate anything core 2 does not know it, so it places an invalidate message on the bus. Afterwards it performs the write in the cache and switches to the modified state. We end up in the (a') invalid-modified state:

State Transitions from (d) Shared-Shared

Read on Core 1 or Core 2. If there is a read on any of the cores, it is a cache hit, served from the cache.

Write on Core 1 or Core 2. If there is a write on a core, the core in question places an invalidate request on the bus. The other core snoops the request and switches to invalid, it was shared so there is no need for writeback. The first core can then perform the write and switches to the modified state, we end up in the (a) modified-invalid or (a') invalid-modified state depending on which core made the update, e.g. if it was core 1:

Beyond Two Cores

The MSI protocol generalises beyond 2 cores. Because of the way the snoopy bus works, the read and invalidate messages are in effect broadcasted to all cores. Any core with a valid value (shared or modified) can reply to a read request. For example here, core 1 has the line in the invalid state and wishes to perform a read so it broadcasts a read request on the bus and one of the cores having the line in the shared state replies:

When an invalidate request is received, any core in the shared state invalidates without writeback, as it is the case for core 3 and core 4 in this example:

When an invalidate message is received, a core in the modified state writes back before invalidating, e.g. core 4 in this example:

Write-Invalidate vs. Write-Update

There are two major types of snooping protocols:

- With write-invalidate, when a core updates a cache line, other copies of that line in other caches are invalidated. Future accesses on the other copies will require fetching the updated line from memory/other caches. It is the most widespread protocol, used in MSI, but also in other protocols such as MESI and MOESI, that we will cover next.

- With write-update:, when a core updates a cache line, the modification is broadcast to copies of that line in other caches: they are updated. This leads to a higher bus traffic compared to write-invalidate. Example of write-update protocols include Dragon and Firefly.

Cache Snooping Implications on Scalability

Given our description of the way cache snooping works, when an invalidate message is sent, it is important that all cores receive the message within a single bus cycle so that they all invalidate at the same time. If this does not happen, one core may have the time to perform a write during that process, which would break consistency.

This becomes harder to achieve as we connect higher numbers of cores together into a chip multiprocessor, because the invalidate signal takes more time to propagate. With more cores the bus capacitance is also higher and the bus cycle is longer. This seriously impacts performance. So overall, a cache snooping-based coherence protocol is a major limitation to the number of cores that can be supported by a CPU.

Multi-Level Caches

Many modern processors include several levels of cache. Generally, level 1 (L1) and 2 (L2) caches are per-core caches, while the level 3 (L3) cache is shared among cores:

Check out for example the technical data for the memory hierarchy of the Zen 4 micro architecture (Ryzen 7000 x86-64 processors) here with 3 levels of cache, L1 and L2 being per core, and L3 shared.

Inclusion Policy. An important design consideration for multi-level caches is the inclusion policy. It defines the fact that higher level caches must include the content of lower level caches or not. Here we will present the simplest policy: the inclusive policy. With this policy the higher-level caches are always a superset of lower level caches. In other words, a cache line present in a lower level cache is always also present in higher level caches.

With the inclusive policy cache misses bring data in lower-level caches from higher-level caches or memory. For example here misses on cache lines A and B brings them from L3 or memory into both L1 and L2 caches:

Should the cache miss require a fetch from memory, with the inclusive policy the cache line will similarly be brought into L1, L2, and L3 caches. A miss due to a line not being in L1 but present in L2 will trigger the transfer of the line from L2 to L1:

The inclusive policy makes that capacity evictions simply consists in dropping lines from lower level caches. If we take our previous example with the L1 cache full with A, B, and C, we can evict B from the L1 cache knowing it is still present in L2:

A capacity eviction in a higher level cache will trigger the eviction from the lower-level caches too, e.g. here L2 is full and A is evicted from both L2 and L1:

MSI and Multi-Level Caches. The simple MSI protocol we presented could apply to such a multi-level cache hierarchy as follows. First, the L3 cache is shared among all cores so there is no problem of coherence here.

In case of a read/write cache miss (cache line not present in local L1/L2, or cache line present but not valid), a read request is placed on the snoopy bus and it can be served from any L1/L2 cache having a valid version of the line. If no other L1/L2 cache has the line, it can be retrieved from the L3 cache in case it is present there. If not, it can be retrieved from memory.

When a core writes in the cache, it places an invalidation message on the bus. When this message is received by the other caches, they invalidate any copy of the line that may be present at levels L1 and L2.

There are others multi-level cache inclusion policies beyond the inclusive one. A good starting point to learn more about them is here.

MESI and MOESI Cache Coherence

You can access the slides for this lecture here.

Here we will present a few optimisations implemented on top of MSI. These optimisations give us two new protocols, MESI and MOESI.

Unnecessary Communication

In a snoopy bus-based cache coherence system, the bus itself is a critical resource, as it is shared by all cores. Only one component can use the bus at a time, so unnecessary use of the bus is a waste of time and impacts performance. In some scenarios, MSI can send a lot of unnecessary requests on the bus. Take for example the following case:

One core, core 2, has the data in the shared state. And all other cores have the data in the invalid state. If there is a write to core 2 we transition from invalid-invalid-invalid-shared to invalid-invalid-invalid-modified. MSI would still broadcast an invalidate request on the bus to all cores even if it's unnecessary.

However, in another scenario, for example when all cores have the data in the shared state, and there is a write on any of these cores, the broadcast is actually needed:

The central problem is that with MSI the core that writes does not know the status of the data on the other cores so it blindly broadcast invalidate messages. How can we differentiate these cases?

Optimising for Non-Shared Values

We need to distinguish between the two shared cases:

- In the first case a cache holds the only copy of a value which is in sync with memory (in other words it is not modified).

- In the second case a cache holds a copy of the value which is in sync with memory and there are also other copies in other caches.

In the first case we do not need to send an invalidate message on write, whereas in the second an invalidate message is needed:

MESI Protocol

The unshared case (first case described above) is very common: in real application, the majority of variables are unshared (e.g. all of a thread's local variables).

The key idea with MESI is to split the shared state into two states that corresponds to the two cases we have presented:

- Exclusive, in which a cache has the only copy of the cache line (and its content is in sync with memory)

- Truly shared, in which the cache holds one of several shared copies of the cache line (and once again its content is in sync with memory)

The relevant transitions are as follows. We switch to exclusive (E) after a read caused a fetch from memory. We switch to truly shared (S) after a read that gets value from another cache. These transitions are illustrated below:

MESI is a simple extension, but it yields a significant reduction in bus usage. Therefore, in practice MESI is more widely used than MSI. We won't cover MESI in details here, however a notable point is that a cache line eviction on a remote core can cause a line in the local core being in state truly shared to be the only remaining copy. In that case we should theoretically switch to exclusive but in practice it is hard to detect, so we stay in truly shared.

MOESI Protocol

MOESI is a further optimisation in which we split the modified state in two:

- Modified is the same as before, the cache contains a copy which differs from that in memory but there are no other copies.

- Owned: the cache contains a copy which differs from that in memory and there may be copies in other caches which are in state S, these copies having the same value as that of the owner.

This is illustrated below:

The owner is the only cache that can make changes without sending an invalidate message. MOESI allows the latest version of the cache line to be shared between caches without having to write it back to memory immediately. When it writes, the owner broadcasts the changes to the other copies, without a writeback:

Only when a cache line in state owned or modified gets evicted will any write back to memory be done.

Directory-Based Cache Coherence

You can access the slides for this lecture here.

Here we will cover a cache coherence protocol that is quite different from the bus-based protocols we have seen until now. It is named directory-based coherence protocol.

Directory Based Coherence

We have seen that shared bus based coherence does not scale well to large amount of cores. This is because only one entity can use the bus at a time. Can we implement a cache coherence protocol with a less directly connected network, such as a grid, or a general packet switched network:

One possible solution is to use a directory holding information about data (i.e. cache lines) in the memory We are going to describe a simple version of this scheme.

Directory Structure

The architecture of a directory-based cache coherence system is as follows:

Each core has a local cache. They are linked together through an interconnect network, as we mentioned something less directly connected than with bus-based coherence. The memory is also connected to the network. Attached to the network is also a component named the directory. The directory contains one entry for each possible cache line, so its size depends on the amount of memory. Each entry in the directory has n present bits, n being the number of cores. If one of these bits is set, it means that the corresponding cache has a copy of the line in question. For each entry there is also a dirty bit in the directory. When it is set it means that only 1 cache has the corresponding line, and that cache is the only owner of that line. In every cache each line also has a local valid bit, indicating the validity of the cache line, as well as a local dirty bit, indicating that the cache is the sole owner of the line or not. A core wishing to make a memory access may need to query the directory about the state of the line to be accessed.

Directory Protocol

Similarly to what we did for MSI, let's have a look at what happens upon core read/write operations in various scenarios, considering a dual-core CPU.

Read Hit in Local Cache. In that case a core wants to read some data, and it's present in the cache, indicated with the valid bit. There is no need to contact the directory and the core just reads from the cache:

Read miss in Local Cache. In case of a read miss (i.e. data is not present in the cache or the corresponding local valid bit is unset), the directory is consulted and the actions taken depend what happens in this scenario depends on the value of the directory dirty bit for that value.

- If the directory dirty bit is unset, first we consult the directory to see if another cache has the line in question: if that is the case, the line can be retrieved from that other cache. If no other cache has the line, it can be safely fetched from memory. The directory bit corresponding to the reading core is then set to 1, as well as the local valid bit in the cache of the reading core.

The two scenarios for a read miss in local cache with directory bit unset are illustrated below:

- If the directory dirty bit is set, we know that another cache has the last version of the cache line, and that it is the one and only owner of the line. So we force that core to sync with memory, and have it also send the line to the reading core. We can clear the directory dirty bit, as is no exclusive owner for that cache line anymore. The local valid bit is also set in the reading core, as well as the present bit in the directory for that core. This is illustrated below:

Write hit in Local Cache with Local Dirty Bit Set. In that case, we know the line in cache is valid, and that the reading core is the sole owner of that line: the write can be performed directly in the cache:

Write Hit in Local Cache with Local Dirty Bit Unset. In that case the writing core is not the sole owner of the cache line, so it consults the directory to know which caches have the line and sends invalidate messages to them. The corresponding bits in the directory can then be cleared. As the writing core is now the sole owner of the line, the local dirty bit is set and the directory dirty bit is set too.

Write Miss in Local Cache. Here again the actions to take depends on the value of the directory dirty bit for the cache line written.

- If the directory dirty bit is unset, the directory present bits are consulted to see if any cache has the line. If that is the case, the cache line is retrieved from the corresponding remote cache, and the writing core also sends an invalidate message to any remote cache having the line. If no remote cache has the line, it is fetched from memory. The present bit is set in the directory for the writing core, and the directory dirty bit is also set. Finally, the local dirty bit is set in the writing core.

This is illustrated below:

- If the directory dirty bit is set, it means that another core has the exclusive last version of the data. The writing core sends a message to the remote core, which updates memory and sends the cache line to the writing core. The writing core performs the write operation and sets its local dirty bit. In the directory, the dirty bit stays set because we still have an exclusive owner. However, the owner is now the writing core, so we set the presence bits accordingly.

This is illustrated below:

Analysis, NUMA Systems

We described a directory-based protocol that is roughly equivalent to the bus-based MSI protocol. There are multiple optimisations possible, but we won't go into details. The important thing to note is that, even if directory-based coherency is designed to scale to more cores than snooping, having a single directory centralising coherency metadata is a serious bottleneck. So the solution is to distribute this metadata, and have multiple directories, each taking care of a subset of the memory address space. This is often coupled with a distributed memory structures where part of the memory is physically local to the processor, and part is remote. This is particular to medium and large multiprocessor systems that have multiple CPU chips. The latency to access local and remote memory is different in these systems, and we talk about Non-Uniform memory Access, NUMA systems.

Here is an example of such system:

In this example we have 2 sockets, which means 2 processor chips, interconnected, so they can operate on a single shared address space. Part of the physical memory is local to socket 1 and part is local to socket 2. We also have 2 directories. Access non-local memory takes more time.

Drawbacks

Directory-based coherency is not a panacea and there are a few drawbacks. Without a common bus network many of the previous communications will take a significant number of CPU cycles. In the presence of long and possibly delays such protocols usually require replies to messages, handshakes, to work correctly, and many doubt that it can be made to work efficiently for heavily shared memory applications. Some machines that used directory-based coherency include SGI Origin, as well as the Intel Xeon Phi.

Synchronisation in Parallel Programming - Locks and Barriers

You can access the slides for this lecture here. All the code samples given here can be found online, alongside instructions on how to bring up the proper environment to build and execute them here.

We have seen previously how to program a shared memory processor using threads, execution flows that share a common address space which is very practical for communications. However, the examples we have seen were extremely simple: there was no need for threads to synchronise, apart from the final join operation. There was also no shared data between threads. Today we are going to see mechanisms that make synchronisation and data sharing (especially in write mode) possible.

Synchronisation Mechanisms

In a multithreaded program the threads are rarely completely independent: they need to wait for each other at particular points during the computations, and they need to communicate by sharing data, in particular in write mode (e.g. one thread writes a value in memory that is supposed to be read or updated by another thread). To allow all of this we use software constructs named synchronisation mechanisms. Here we will cover two mechanisms: barriers, that let threads wait for each other, and locks, allowing threads to share data safely. In the next lecture we will cover condition variables, that lets threads signal the occurrence of events to each other.

Barriers

A barrier allows selected threads to meet up at a certain point in the program execution. Upon reaching the barriers, all threads wait until the last one reaches it.

Consider this example:

We have the time flowing horizontally. Thread 2 reaches the barrier first, and it starts waiting for all the other threads to reach that point. Thread 1, then 3 do the same. When thread 4 reaches the barriers, it's the last one, so all threads resume execution.

Barriers are useful in many scenarios. For example with data parallelism, assuming an application is composed of multiple phases or steps. An example could be a first step in which threads first filter the input data, based on some rule, and then in a second step the threads perform some computation on the filtered data. We may want to have a barrier to make sure that the filtering step is finished in all threads before any starts the computing step:

Another use case is when, because of data dependencies, we can parallelise only a subset of a loop's iterations at a time. Recall the example from the lecture on shared memory programming. We can put a barrier in a loop to ensure that all the parallel iterations in one step are computed before going to the next step:

Barriers are very natural when threads are used to implement data parallelism@ we want the whole answer from a given step before proceeding to the next one.

Barrier Example

Let's write a simple C program using barriers, with the POSIX thread library. We will create 2 threads, which behaviour is illustrated below:

Each thread performs some kind of computations (green part). Then each thread reaches the barrier, and prints the fact that it has done so on the standard output. We will make sure that the amount of computations in one thread (thread 1) is much larger than the amount in the other thread (thread 2), so we should see thread 1 printing the fact that it has reached the barrier before thread 2 does so. Once the two threads are at the barrier, they should both resume execution, and they should print out the fact that they are past the barrier approximately at the same time. We'll repeat all that a few time in a loop.

This is the code for the program (you can access and download the full code here).

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <unistd.h>

/* the number of loop iterations: */

#define ITERATIONS 10

/* make sure one thread spins much longer than the other one: */

#define T1_SPIN_AMOUNT 200000000

#define T2_SPIN_AMOUNT (10 * T1_SPIN_AMOUNT)

/* A data structure repreenting each thread: */

typedef struct {

int id; // thread unique id

int spin_amount; // how much time the thread will spin to emulate computations

pthread_barrier_t *barrier; // a pointer to the barrier

} worker;

void *thread_fn(void *data) {

worker *arg = (worker *)data;

int id = arg->id;

int iteration = 0;

while(iteration != ITERATIONS) {

/* busy loop to simulate activity */

for(int i=0; i<arg->spin_amount; i++);

printf("Thread %d done spinning, reached barrier\n", id);

/* sync on the barrier */

int ret = pthread_barrier_wait(arg->barrier);

if(ret != PTHREAD_BARRIER_SERIAL_THREAD && ret != 0) {

perror("pthread_barrier_wait");

exit(-1);

}

printf("Thread %d passed barrier\n", id);

iteration++;

}

pthread_exit(NULL);

}

int main(int argc, char **argv) {

pthread_t t1, t2;

pthread_barrier_t barrier;

worker w1 = {1, T1_SPIN_AMOUNT, &barrier};

worker w2 = {2, T2_SPIN_AMOUNT, &barrier};

if(pthread_barrier_init(&barrier, NULL, 2)) {

perror("pthread_barrier_init");

return -1;

}

if(pthread_create(&t1, NULL, thread_fn, (void *)&w1) ||

pthread_create(&t2, NULL, thread_fn, (void *)&w2)) {

perror("pthread_create");

return -1;

}

if(pthread_join(t1, NULL) || pthread_join(t2, NULL))

perror("phread_join");

return 0;

}

This code declares a data structure worker representing a thread.

It contains an integer identifier id, another integer spin_amount representing the amount of time the thread should spin to emulate the act of doing computations, and a pointer to a pthread_barrier_t data structure representing the barrier the thread will synchronise upon.

The barrier is initialised in the main function with pthread_barrier_init.

Notice the last parameter that indicates the amount of threads that will be waiting on the barrier (2).

An instance of worker is created for each thread in the main function, and the relevant instance is passed as parameter to each thread's function thread_fn.

The threads' function starts by spinning with a for loop.

As previously described the amount of time thread 2 spins is much higher than for thread 1.

The threads meet up at the barrier by both calling pthread_barrier_wait.

Locks: Motivational Example 1

We need locks to protect data that is shared between threads and that can be accessed in write mode by at least 1 thread. Let's motivate why this is very important. Assume that we have a cash machine, which supports various operations and among them cash withdrawal by a client. This is the pseudocode for the withdrawal function:

int withdrawal = get_withdrawal_amount(); /* amount the user is asking to withdraw */

int total = get_total_from_account(); /* total funds in user account */

/* check whether the user has enough funds in her account */

if(total < withdrawal)

abort("Not enough money!");

/* The user has enough money, deduct the withdrawal amount from here total */

total -= withdrawal;

update_total_funds(total);

/* give the money to the user */

spit_out_money(withdrawal);

First the cash machine queries the bank account to get the amount of money in the account. It also gets the amount the user wants to withdraw from some input. The machine then checks that the user has enough money to satisfy the request amount to withdraw. If not it returns an error. If the check passes, the machine compute the new value for the account balance and updates it, then spits out the money.

This all seems fine when there is only one cash machine, but consider what happens when concurrency comes into play, i.e. when we have multiple cash machines.

Let's assume we now have 2 transactions happening approximately at the same time from 2 different cash machines.

This could happen in the case of a shared bank account with multiple credit cards for example.

We assume that there is £105 on the account at first, and that the first transaction is a £100 withdrawal while the second is a £10 withdrawal.

One of these transactions should fail because there is not enough money to satisfy both: 100 + 10 > 105.

A possible scenario is as follows:

- Both threads get the total amount of money in the account in their local variable

total, both get 105. - Both threads perform the balance check against the withdrawal amount, both pass because

100 < 105and10 < 105. - Thread 1 then updates the account balance with

105-100 = 5and spits out £100. - Then thread 2 updates the account, with

105 - 10 = 95and spits out £10.

A total of £110 has been withdrawn, which is superior to the amount of money the account had in the first place. Even better, there is £95 left on the account. We have created free money!

Of course this behaviour is incorrect. It is called a race condition, when shared data (here the account balance) is accessed concurrently in write mode by at least 1 thread (here it is accessed in write mode by both cash machines i.e. threads). We need locks to solve that issue, to protect the shared data against races.

Locks: Motivational Example 2

Let's take a second, low-level example. Consider the i++ statement in a language like java or C. Let's assume that the compiler or the JVM is transforming this statement into the following machine instructions:

1. Load the current value of i from memory and copy it into a register

2. Add one to the value stored into the register

3. Store from the register to memory the new value of i

Let's assume that i is a global variable, accessible from 2 threads running concurrently on 2 different cores.

A possible scenario when the 2 threads execute i++ approximately at the same time is:

In this table time is flowing downwards.

Thread 1 loads i in a register, it's 7, then increments it, it becomes 8, and then stores 8 back in memory.

Next, thread 2 loads i, it's 8, increment it to 9, and stores back 9.

This behaviour is expected and correct.

The issue is that there are other scenarios possible, for example:

Here, both threads load 7 at the same time. Then they increment the local register, it becomes 8 in both cores And then they both store 8 back. This behaviour is not correct: once again we have a race condition because the threads are accessing a shared variable in write mode without proper synchronisation.

Note that this race condition can also very well happen on a single core where the threads' execution would be interlaced.

Critical Sections

The parts of code in a concurrent program where shared data is accessed are called critical sections. In our cash machine example, we can identify the critical section as follows:

int withdrawal = get_withdrawal_amount();

/* critical section starts */

int total = get_total_from_account();

if(total < withdrawal)

abort("Not enough money!");

total -= withdrawal;

update_total_funds(total);

/* critical section ends */

spit_out_money(withdrawal);

For our program to behave correctly without race conditions, the critical sections need to execute:

- Serially, i.e. only a single thread should be able to run a critical section at a time;

- Atomically: when a thread starts to execute a critical section, the thread must first finish executing the critical section in its entirety before another thread can enter the critical section.

A lock is a synchronisation primitive enforcing the serialisation and atomicity of critical sections.

Locks

Each critical section is protected by its own lock. Threads wishing to enter the critical section try to take the lock and:

- A thread attempting to take a free lock will get it.

- Other threads requesting the lock wait until the lock is released by its holder.

Let's see an example: we have two threads running in parallel. They both want to execute a critical section approximately at the same time. Both try to take the lock. Let's assume thread 1 tried slightly before thread 2 and gets the lock, it can then execute the critical section while thread 2 waits:

Once it has finished executing the critical section, thread 1 releases the lock. At that point thread 2 tries to take the lock again, succeeds, and start to execute the critical section:

When thread 2 is done with the critical section, it finally releases the lock:

With the lock, we are ensured that the critical section will always be executed serially (i.e. by 1 thread at a time) and atomically (a thread starting to execute the critical section will finish it before another thread enter it).

Pthreads Mutexes

A commonly used lock offered by the POSIX thread library is the mutex, which stands for mutual exclusion lock.

After it is initialised, its use is simple: just enclose the code corresponding to critical sections between a call to pthread_mutex_lock and pthread_mutex_unlock:

#include <pthread.h>

pthread_mutex_t mutex;